Chapter 3. Building a Distributed Agent

Systems Engineering for Agentic Applications

Read the chapter at Systems Engineering for Agentic Applications

This chapter covers

From the terminal to the cloud

Distributed computation

Distributed Async Await

The Research Agent

When you build an agentic application that runs on your machine, you enjoy the simplicity of building a local application. When you build a distributed agentic application, you face the challenges of building a distributed application. This chasm separates proof of concept from production system.

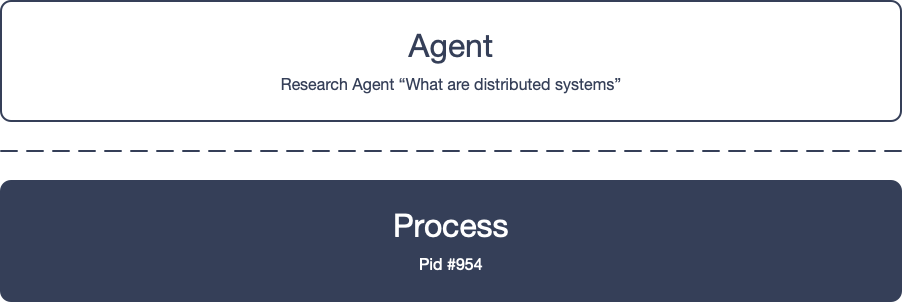

In Chapter 2, we built the Desktop Warrior, a local agent living in a single process. That process boundary quietly solved some of the hardest problems in systems design: identity, continuity in time and space, state (memory), and failure. The process was the agent. We never had to ask

“Which Desktop Warrior?” because the agent instance was identified by the process. We never had to wonder “What does Desktop Warrior remember?” because the agent instance’s memory was contained in the process’s memory.

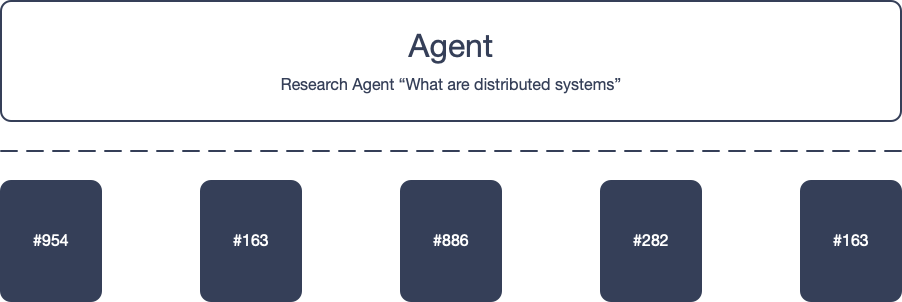

When we move from local to global, that boundary disappears. One logical agent instance may span many physical processes across time and space. What felt continuous becomes fragmented. What felt durable becomes ephemeral. What was implicit becomes explicit. What was free needs to be engineered.

In this chapter, we’ll build The Research Agent—a distributed, recursive agent that breaks a research topic into subtopics, researches each subtopic recursively, and synthesizes the results. Recursion is key: one agent instance spawns additional instances, creating a sprawling multi-agent system. From a deceptively simple code base, we’ll uncover the core challenges of distributed computation, coordination, and recovery (see Figure 3.1).

Concurrent and Distributed Systems in a Nutshell

The defining characteristic of concurrency is partial order. In a concurrent system, we do not know what will happen next. To mitigate concurrency, we employ coordination. Coordination refers to constraining possible schedules to desirable schedules, while synchronization refers to enforcing that constraint. The fundamental operation of synchronization is to wait.

E₁ | E₂ ≡ (E₁ ; E₂) ∨ (E₂ ; E₁)Ideally, two executions that run concurrently should produce the same result as running one after the other

The defining characteristic of distribution is partial failure. In a distributed system, we do not know what will fail next. To mitigate distribution, we employ recovery. Recovery refers to extending partial schedules to complete schedules, while supervision refers to enforcing that extension. The fundamental operation of supervision is to restart.

E | ⚡️ ≡ EIdeally, a process that may fail should produce the same result as running to completion

3.1 Moving from the Terminal to the Cloud

Running applications locally is a joy. My laptop rarely restarts, my terminal window never crashes, and my terminal tabs stay open for days, sometimes weeks. The process running in that tab is still there, faithfully executing or patiently waiting for the next input, ready to continue as if no time has passed. Sure, in theory, the process could crash at any moment, but in practice, we hardly think about that possibility. Failure is an edge case, not the norm (see Figure 3.2).

Distributed systems shatter this simplicity. Your application no longer lives in one process but spans many processes across machines, data centers, and continents, executing over minutes, hours, days, or weeks. Failure is no longer an edge case but becomes the regular case when processes crash and networks partition (see Figure 3.3).

So before we build the Research Agent, we need a model of computations that spans space and time.

Read the rest of this chapter at Systems Engineering for Agentic Applications