Read the chapter at Systems Engineering for Agentic Applications

This chapter covers

Agents and Environments

Goals and Plans

Autonomy and Alignment

Context Engineering

The Desktop Warrior

Your desktop is spotless. Completely empty, adorned by a carefully curated wallpaper highlighting your personality. Except for one folder: “Desktop”. Inside Desktop, digital chaos reigns: old screenshots, old documents, hastily cloned GitHub repos, never deleted. And, of course, another folder called “Desktop”. The battle for order was lost long ago to Desktop’s recursion depth.

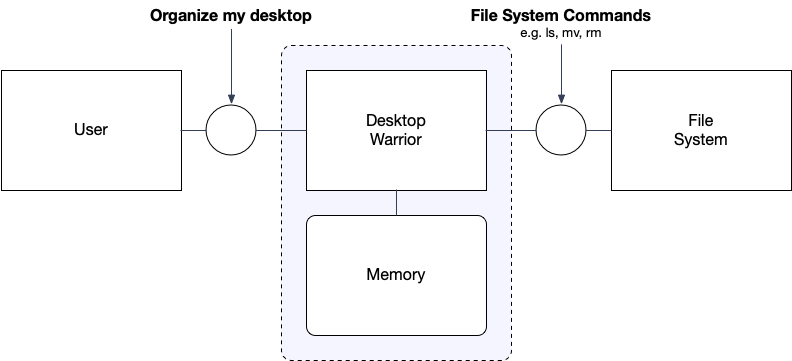

In this chapter, we’ll build a local AI agent to fight that battle. This Desktop Warrior is simple enough to follow, yet complex enough to reveal the patterns we will encounter when building ambitious, distributed agentic applications.

2.1 Agents in their Environments

Systems engineering depends on accurate and concise mental models to reason about complex systems. Before we tackle the Desktop Warrior, we need the mental models to reason rigorously about abstract concepts like autonomy and alignment, as well as fuzzy concepts like prompt engineering.

Uninterpreted Functions

Complex systems often contain aspects that resist precise definition. When does an agent make the best decision? When is a desktop well organized? How can we reason rigorously about systems when key concepts remain undefined?

Throughout this chapter, we make use of *uninterpreted functions*—functions with defined interfaces but unspecified implementations. We will use two types of uninterpreted functions: one that maps its arguments onto a boolean value, and another that maps its arguments onto a numerical value. Boolean values answer simple yes or no questions, while numerical values allow us to compare different outcomes.

For example, to express preferences, we simply postulate a fitness function `U` that maps inputs to numerical values, where higher values indicate better outcomes:

Preference: If `U` maps a desktop without screenshots to a higher value than a desktop with screenshots, then a desktop without screenshots is preferable.

`U(Desktop[without screenshots]) > U(Desktop[with screenshots])`

Equivalence: If `U` maps a desktop without screenshots to the same value as a desktop with screenshots, then they are equivalent.

`U(Desktop[without screenshots]) = U(Desktop[with screenshots])`

By separating the interface (what we need for reasoning) from the implementation (details we may not know or care about), we concentrate all uncertainty into `U`. This makes the rest of our model crisp: we can reason rigorously about agent behavior without defining what “better” actually means.
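One way to picture an uninterpreted function in code is as a type signature with no fixed body. The sketch below is only an illustration under stated assumptions: the `Desktop` type (a set of file names), the helper names `is_preferable` and `is_equivalent`, and the sample interpretation `fewer_screenshots` are all hypothetical and not part of the chapter's model. The two helpers reason about preference and equivalence using nothing but the interface of `U`.

```python
from typing import Callable

# Assumption for illustration: a desktop is just a set of file names.
Desktop = frozenset[str]

# The interface of the uninterpreted function U: Desktop -> number.
# Nothing here says how the number is computed.
Fitness = Callable[[Desktop], float]


def is_preferable(U: Fitness, a: Desktop, b: Desktop) -> bool:
    """Preference: a is preferable to b if U(a) > U(b)."""
    return U(a) > U(b)


def is_equivalent(U: Fitness, a: Desktop, b: Desktop) -> bool:
    """Equivalence: a and b are equivalent if U(a) = U(b)."""
    return U(a) == U(b)


def fewer_screenshots(desktop: Desktop) -> float:
    """One possible interpretation of U (hypothetical):
    every file whose name starts with 'Screenshot' lowers the score by one."""
    return -float(sum(1 for name in desktop if name.startswith("Screenshot")))


with_screenshots: Desktop = frozenset({"Screenshot 1.png", "notes.txt"})
without_screenshots: Desktop = frozenset({"notes.txt"})

print(is_preferable(fewer_screenshots, without_screenshots, with_screenshots))
```

Swapping `fewer_screenshots` for any other function with the same signature leaves `is_preferable` and `is_equivalent` untouched, which is the sense in which all uncertainty is concentrated in `U`.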

2.1.1 Agents

An agent is a component that operates autonomously in its environment in pursuit of an objective. We define an agent 𝒜 as a tuple of a model M, a set of tools T, and a system prompt s:

𝒜 = (M, T, s)

We define an agent instance A as an agent 𝒜, in this context also called the configuration, extended with a history h:

A = (M, T, s, h)

The history h, also called the trajectory or trace of an agent instance, is the sequence of prompts p and replies r:

h = [(p₁, r₁), (p₂, r₂), ...]

If we want to be more concrete, we refine this generic notation: if the prompt comes from a user, we write u. If the reply is an answer to the user, we write a. Thus a conversation history between user and agent instance looks like:

h = [(u₁, a₁), (u₂, a₂), ...]

If tools are involved, the history expands: if the agent instance issues a tool call, we write t. If the tool call returns a result, we write o. Thus a conversation history involving tools looks like:

h = [(u₁, t₁), (o₁, a₁), ...]

This layered notation lets us zoom in or out: at the highest level, p and r suffice to reason abstractly about agent instance trajectories; at the concrete level, u, a, t, and o clarify who is speaking and to whom.
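The tuples above map naturally onto immutable records. The following is a minimal sketch, not a definitive implementation: the class names `Message`, `Agent`, and `AgentInstance`, the helper `roles`, the representation of the model and tools as plain strings, and the sample tool names are all assumptions made for illustration. It encodes the tool-call history h = [(u₁, t₁), (o₁, a₁)] and shows how the concrete roles can be read back from it.

```python
from dataclasses import dataclass
from typing import Literal

# Concrete roles from the text: u = user prompt, a = answer,
# t = tool call, o = tool result.
Role = Literal["u", "a", "t", "o"]


@dataclass(frozen=True)
class Message:
    role: Role
    content: str


# One history entry is a (prompt, reply) pair.
Turn = tuple[Message, Message]


@dataclass(frozen=True)
class Agent:
    """The agent (M, T, s)."""
    model: str             # M, here only a hypothetical model name
    tools: frozenset[str]  # T, here only hypothetical tool names
    system_prompt: str     # s


@dataclass(frozen=True)
class AgentInstance:
    """The agent instance (M, T, s, h): an agent extended with a history."""
    agent: Agent
    history: tuple[Turn, ...] = ()  # h


def roles(history: tuple[Turn, ...]) -> list[tuple[str, str]]:
    """Zoom in: show which concrete role fills each prompt and reply slot."""
    return [(prompt.role, reply.role) for prompt, reply in history]


warrior = Agent(
    model="some-model",
    tools=frozenset({"list_files", "move_file"}),
    system_prompt="Keep the desktop tidy.",
)
instance = AgentInstance(
    agent=warrior,
    history=(
        (Message("u", "Clean up my desktop."), Message("t", "list_files")),
        (Message("o", "Screenshot 1.png, Desktop"), Message("a", "Found one screenshot.")),
    ),
)
print(roles(instance.history))
```

At the abstract level every entry is simply a (p, r) pair; the `role` field carries the finer u, a, t, o distinction only when it is needed.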

Agent Evolution and Identity

Agent instances evolve by accepting a prompt and generating a response (here we model the generation of r and appending (p, r) as an atomic step, but of course we could separate the steps if desired):

generate : A₁ = (M, T, s, h) × p → A₂ = (M, T, s, h + [(p, r)]) × r

Of course, we are not limited to generation. Since an agent instance is just a tuple of model, tools, system prompt, and history, we can alter any aspect of the agent instance. For example, we could reset the history:

redefine : A₁ = (M, T, s, h) × h := [] → A₂ = (M, T, s, [])

In everyday conversation, we speak of “the agent” as if the agent instance were a persistent, continuous entity evolving over time, like talking to one person across multiple interactions. However, formally, every interaction (generation or redefinition) creates a new agent instance. For example, generate accepts A₁ and yields A₂ with an extended history.

The result is a fundamental tension: our intuition suggests a single continuous agent instance, while the formal model suggests multiple discrete agent instances.
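The discrete view can be made tangible with immutable values. The sketch below is written under stated assumptions: the model M is stood in for by a hypothetical callable taking (system prompt, history, prompt), `echo_model` is a placeholder rather than a real language model, and `reset_history` is one illustrative case of redefine. Both operations return a new instance and leave the old one unchanged.

```python
from dataclasses import dataclass, replace
from typing import Callable

Turn = tuple[str, str]  # one history entry: (prompt p, reply r)

# Hypothetical signature for M: (system prompt, history, prompt) -> reply.
Model = Callable[[str, tuple[Turn, ...], str], str]


@dataclass(frozen=True)
class AgentInstance:
    model: Model                    # M
    tools: frozenset[str]           # T
    system_prompt: str              # s
    history: tuple[Turn, ...] = ()  # h


def generate(a1: AgentInstance, p: str) -> tuple[AgentInstance, str]:
    """generate: produce reply r and append (p, r) as one atomic step."""
    r = a1.model(a1.system_prompt, a1.history, p)
    a2 = replace(a1, history=a1.history + ((p, r),))
    return a2, r


def reset_history(a1: AgentInstance) -> AgentInstance:
    """redefine with h := []: same model, tools, and prompt, empty history."""
    return replace(a1, history=())


def echo_model(system_prompt: str, history: tuple[Turn, ...], prompt: str) -> str:
    """Placeholder for a real model: numbers its replies by history length."""
    return f"reply {len(history) + 1} to: {prompt}"


a1 = AgentInstance(echo_model, frozenset(), "Keep the desktop tidy.")
a2, r = generate(a1, "hello")
a3 = reset_history(a2)

print(a1.history)  # a1 is untouched by generate
print(a2.history)  # a2 carries the extended history
```

Here `a1`, `a2`, and `a3` are three distinct values, even though a conversational reading would call all of them "the agent".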
